## select

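Like find_all(), select() returns every match as a list.

Use select_one() instead to return only a single result (the first match).

Write a class with a period (.), an ID with a hash (#), and a descendant tag with a space.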

print(soup.select("p"))  # all <p> tags

print(soup.select(".d"))  # tags whose class is d

print(soup.select("p.d"))  # <p> tags whose class is d

print(soup.select("#i"))  # the tag whose id is i

print(soup.select("p#i"))  # the <p> tag whose id is i

print(soup.select("body p"))  # <p> tags that are descendants of <body>

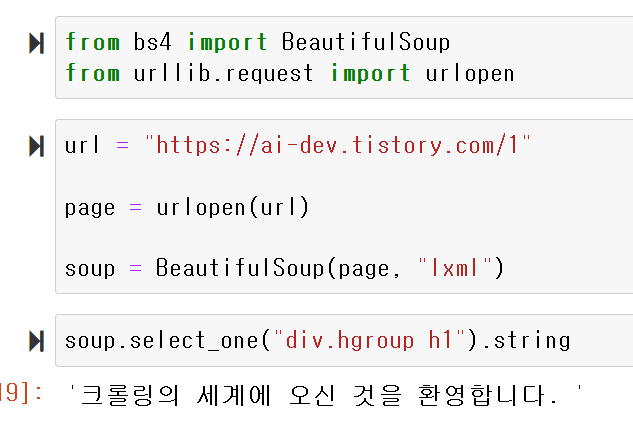

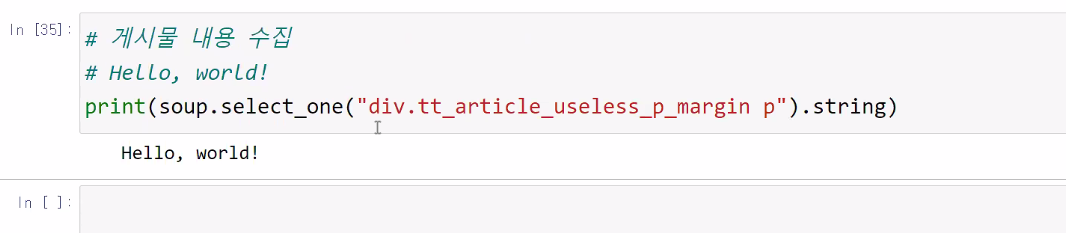
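To try these selectors without fetching a real page, here is a minimal sketch that runs each of them against a small HTML string. The sample markup is made up for illustration, and the sketch uses Python's built-in "html.parser" so that lxml does not need to be installed.

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup, made up for this sketch.
html = """
<html><body>
  <p class="d">first paragraph</p>
  <p id="i">second paragraph</p>
  <div class="d">a div with class d</div>
</body></html>
"""

soup = BeautifulSoup(html, "html.parser")  # built-in parser, no lxml needed

all_p = soup.select("p")              # every <p>
class_d = soup.select(".d")           # any tag with class "d" (a <p> and a <div>)
p_class_d = soup.select("p.d")        # only <p> tags with class "d"
by_id = soup.select("#i")             # the tag with id "i"
p_by_id = soup.select("p#i")          # the <p> tag with id "i"
descendants = soup.select("body p")   # <p> tags anywhere under <body>
first_p = soup.select_one("p")        # first match only
missing = soup.select_one("span")     # no match: select_one returns None

print([tag.text for tag in all_p])
print([tag.name for tag in class_d])
print(first_p.text, missing)
```

Note the difference on a miss: select() gives an empty list, while select_one() gives None.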

Crawling example

Inspect the page's HTML with the F12 developer tools.

Dynamic crawling

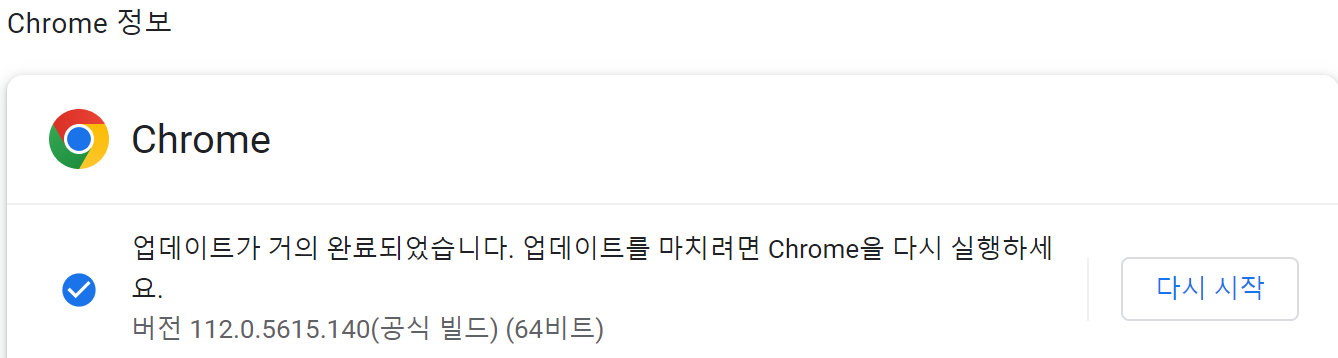

ChromeDriver - WebDriver for Chrome - Downloads: https://chromedriver.chromium.org/downloads

Download the ChromeDriver version that matches your installed Chrome version.

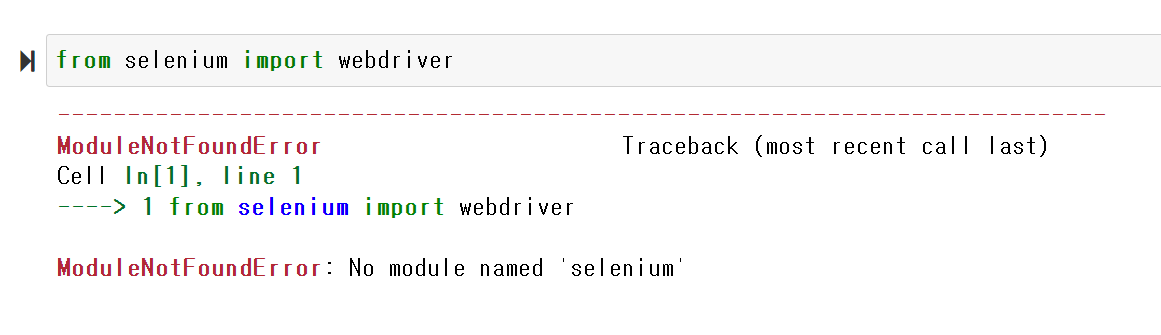

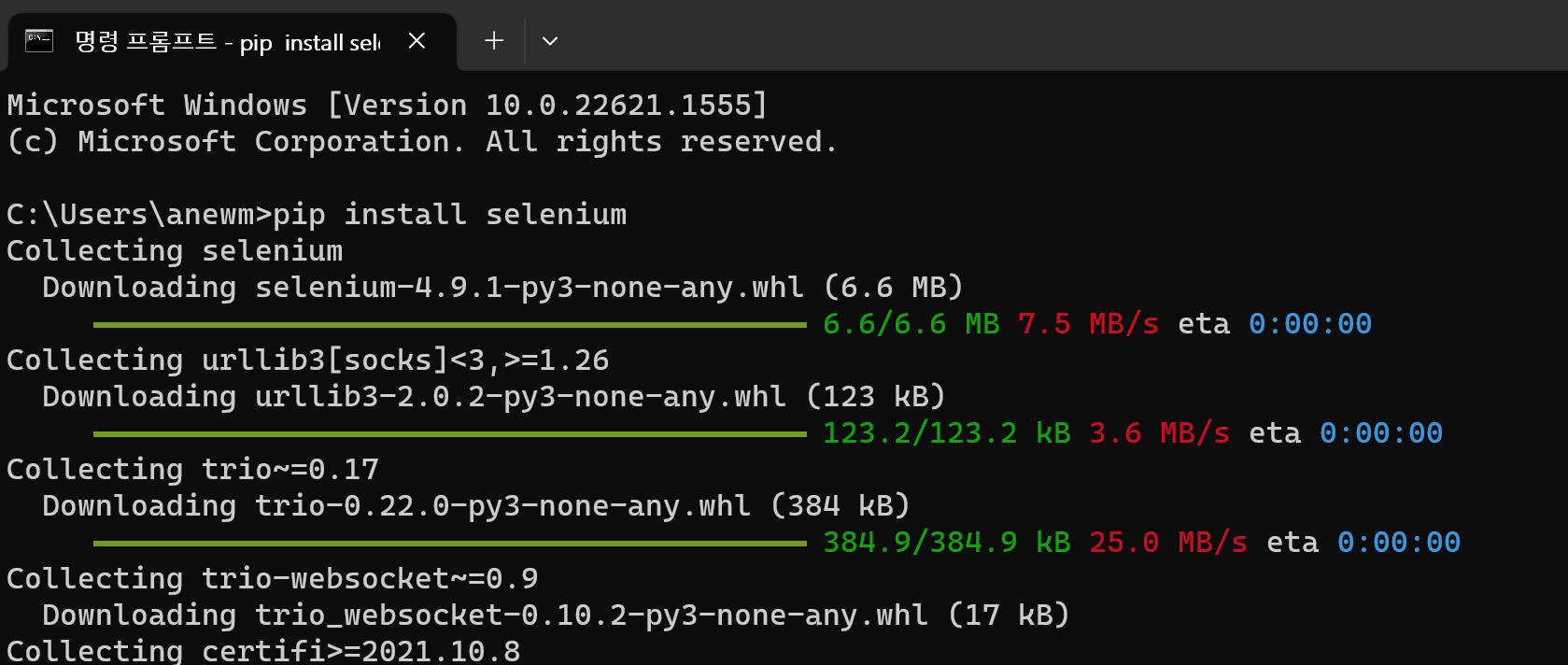

If Selenium won't start, it is probably not installed yet.

Enter pip install selenium in the command prompt (cmd).

# Accessing the DOM with Selenium

Returns a single element, the first match (like bs4's find()):

find_element

Returns a list of elements (like bs4's find_all()):

find_elements

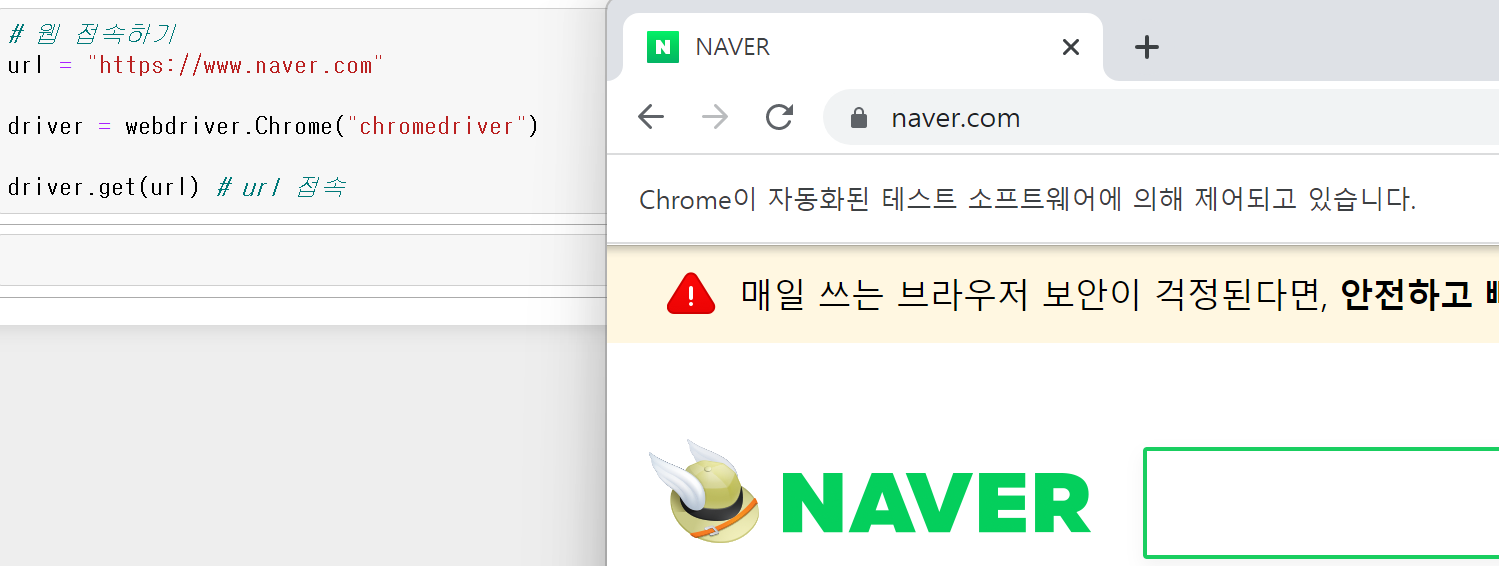

# Opening a web page

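from selenium import webdriver

from selenium.webdriver.chrome.service import Service

url = "https://www.naver.com"

driver = webdriver.Chrome(service=Service("chromedriver"))  # Selenium 4 takes the path to the downloaded chromedriver through a Service object

driver.get(url)  # open the URL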

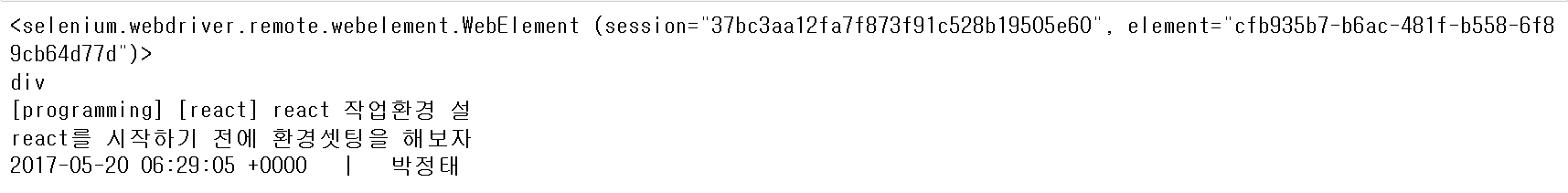

## css_selector

Uses the same CSS selector syntax as bs4's select().

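from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.common.by import By

url = "https://pjt3591oo.github.io"

driver = webdriver.Chrome(service=Service("chromedriver"))

driver.get(url)

selected = driver.find_element(by=By.CSS_SELECTOR, value="div.p")  # first <div class="p">

print(selected)  # the WebElement object itself

print(selected.tag_name)  # the element's tag name ("div")

print(selected.text)  # the visible text inside the element

selected = driver.find_elements(By.CSS_SELECTOR, "div.p")  # every match, as a list

print(selected)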

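Accessing an element that doesn't exist

Unlike bs4, where find() and select_one() simply return None, Selenium's find_element raises an error when no element matches:

NoSuchElementException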

Mouse control

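from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.common.by import By

url = "https://pjt3591oo.github.io"

driver = webdriver.Chrome(service=Service("chromedriver"))

driver.get(url)

selected = driver.find_element(by=By.CSS_SELECTOR, value="div.p a")  # first link inside <div class="p">

print(selected)

print(selected.text)

selected.click()  # click the link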

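Error page

Once the DOM tree has been fetched from the main page, navigating to another page makes the elements fetched earlier unusable (Selenium raises StaleElementReferenceException when you touch them).

So avoid using click() to move between pages where possible.

click() is better suited to cases where data changes within the current page, without navigating to a new one.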

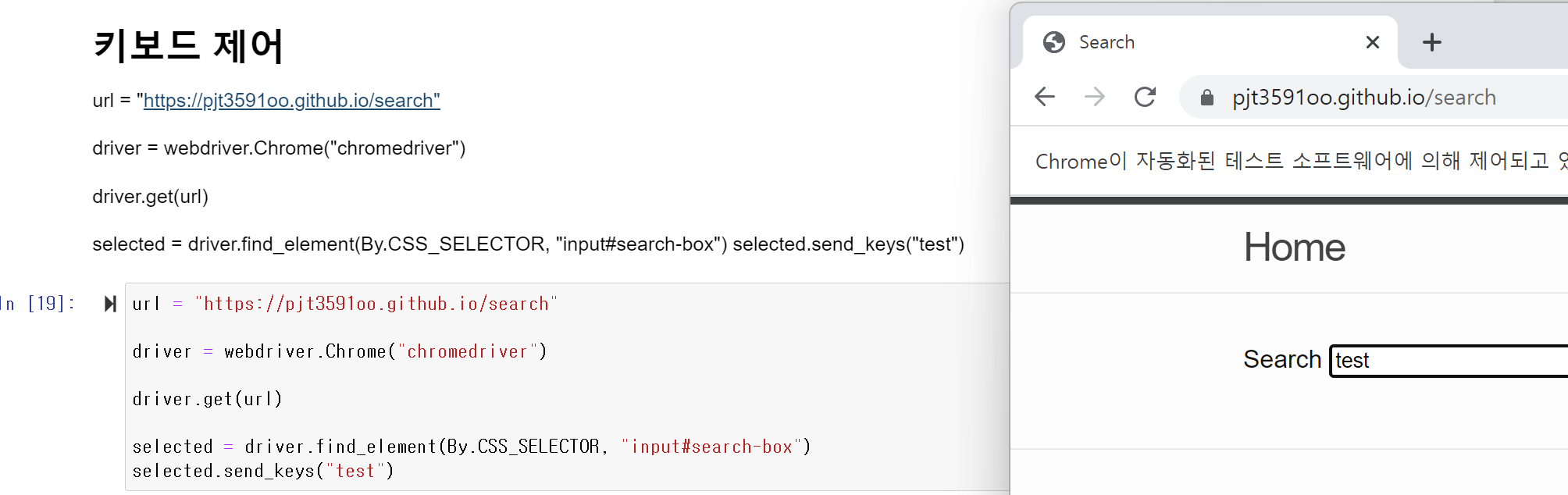

Keyboard control

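from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.common.by import By

from selenium.webdriver.common.keys import Keys

url = "https://pjt3591oo.github.io/search"

driver = webdriver.Chrome(service=Service("chromedriver"))

driver.get(url)

selected = driver.find_element(By.CSS_SELECTOR, "input#search-box")

selected.send_keys("test")  # type text into the search box

selected.send_keys(Keys.ENTER)  # press the Enter key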

Combining Selenium and bs4

page_source: the HTML of the page currently loaded in the browser

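from bs4 import BeautifulSoup

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

url = "https://pjt3591oo.github.io"

driver = webdriver.Chrome(service=Service("chromedriver"))

driver.get(url)

soup = BeautifulSoup(driver.page_source, "lxml")  # hand the browser's HTML to bs4 (requires lxml)

print(soup.select("div"))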

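from bs4 import BeautifulSoup

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.common.by import By

from selenium.webdriver.common.keys import Keys

url = "https://pjt3591oo.github.io/search"

driver = webdriver.Chrome(service=Service("chromedriver"))

driver.get(url)

selected = driver.find_element(By.CSS_SELECTOR, "input#search-box")

selected.send_keys("test")

selected.send_keys(Keys.ENTER)

soup = BeautifulSoup(driver.page_source, "lxml")  # HTML after the search has run

items = soup.select("ul#search-results li")

for item in items:

    title = item.select_one("h3").text

    description = item.select_one("p").text

    print(title)

    print(description)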
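The loop above assumes every result has both an <h3> and a <p>; if either is missing, select_one() returns None and .text raises AttributeError. A slightly more defensive sketch, using a hypothetical parse_results helper and made-up sample markup in place of driver.page_source:

```python
from bs4 import BeautifulSoup


def parse_results(html):
    """Hypothetical helper: (title, description) pairs from ul#search-results."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    for item in soup.select("ul#search-results li"):
        title = item.select_one("h3")
        description = item.select_one("p")
        # select_one returns None for a missing tag, so guard before reading text
        results.append((
            title.get_text(strip=True) if title else "",
            description.get_text(strip=True) if description else "",
        ))
    return results


# Hypothetical markup shaped like the selectors above.
sample = """
<ul id="search-results">
  <li><h3>First post</h3><p>About the first post</p></li>
  <li><h3>Second post</h3></li>
</ul>
"""
print(parse_results(sample))
```

With Selenium, the call would be parse_results(driver.page_source).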

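Example: search Naver for 고슴도치 (hedgehog) and open its entry in the Naver Knowledge Encyclopedia (지식백과)

Method 1

# Search Naver for 고슴도치 (hedgehog), then open the Knowledge Encyclopedia entry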

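from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.common.by import By

from selenium.webdriver.common.keys import Keys

url = "https://www.naver.com/"

driver = webdriver.Chrome(service=Service("chromedriver"))

driver.get(url)

driver.implicitly_wait(3)  # implicit wait: element lookups retry for up to 3 seconds

search = driver.find_element(By.CSS_SELECTOR, "input#query")

search.send_keys("고슴도치")

search.send_keys(Keys.ENTER)

post = driver.find_element(By.CSS_SELECTOR, "a.area_text_title")

post.click()

Method 2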

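from selenium import webdriver

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.common.by import By

from selenium.webdriver.common.keys import Keys

url = "https://www.naver.com/"

driver = webdriver.Chrome(service=Service("chromedriver"))

driver.get(url)

driver.implicitly_wait(3)  # implicit wait

search = driver.find_element(By.CSS_SELECTOR, "input#query")

search.send_keys("고슴도치")

search.send_keys(Keys.ENTER)

selected = driver.find_element(By.CSS_SELECTOR, "div.title_area a")  # link inside the result's title area

selected.click()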

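Implicit wait

driver.implicitly_wait(3)  # element lookups keep retrying for up to 3 seconds before raising

For a fixed pause instead, use time.sleep():

import time

time.sleep(1)  # always pauses for 1 second, whether or not the page is ready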
